
Next Generation Self-Driving Vehicles and Emotions

Introduction

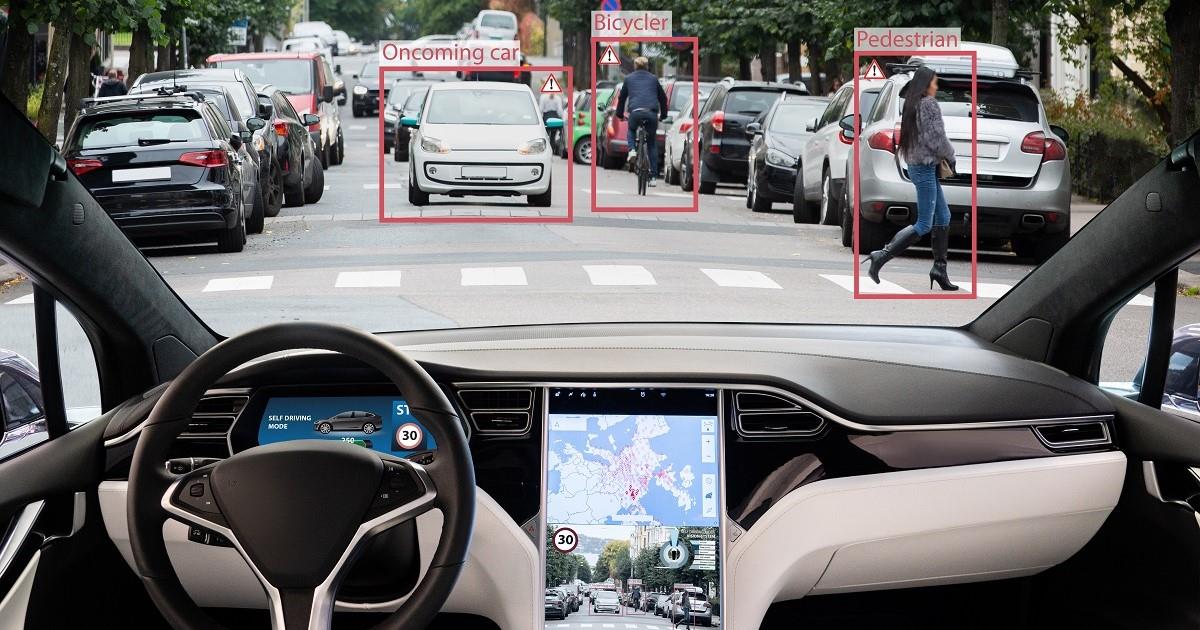

Whether or not we are comfortable with autonomous self-driving vehicles, they are coming to town. They are expected to be available for commercial use by 2030, and their implications are numerous. First, we need to ask whether we are indeed ready for such vehicles. It may also be pertinent to re-evaluate how we design autonomous cars. After all, both in the past and at present, control of a vehicle has always been in the hands of a human user. While the human user can, and quite often does, make mistakes, society’s general acceptance of such vehicles is still quite high. However, this is all going to change when a completely autonomous artificial brain or virtual robot takes the driver’s seat.

One particular aspect of human drivers is their ability to connect with other drivers at an abstract level. While an artificial mind might be able to guide a vehicle through different situations, we believe that a key feature missing from the next generation of autonomous vehicles is the ability of artificial minds to connect emotionally and socially, not only with each other but also with other entities such as human beings. Just as people give way to others as a gesture of kindness or help someone in an accident, there is a strong need to empower autonomous vehicles with emotions and social norms. Doing so would advance autonomous vehicle design principles so that they are more in line with, and modeled more closely on, the human aspect of drivers.

Here, we present a viewpoint in the form of a set of substantiated reasoned arguments analyzing and exploring the feasibility of incorporating human emotions and social norms in the design of the next generation of Autonomous Vehicles (AVs).

Some Evidence from Existing Literature

A detailed review of the literature related to the proposed scheme has been performed and divided into four main categories. The first category covers literature that supports the role of ethics in robots through theoretical debate. The second category covers literature that supports the use of ethics or norms in the design of AVs, but only theoretically. The third category covers state-of-the-art literature that has applied social norms to autonomous vehicles using a simulation approach. The last category covers literature that supports the concept of humanizing robots with the help of emotions.

Computers are becoming more autonomous day by day and are increasingly capable of making decisions of their own. Intelligent computer systems can collect information from humans, analyze it, make decisions, and store that information or provide it to third parties. There is therefore a need to check the moral values behind computer decisions. Artificial intelligence scientists have suggested that ethics needs to be added to technology, an area that is still lagging behind. One report summarized that from 1995 to 2015 very little effort was made to implement ethics in robots. Past studies have raised the question of whether a robot could be a moral agent. In addition, researchers have found that a robot could be treated as a living thing that acts on its own decisions and can decide what is right and what is wrong with respect to humans. The latest smart machines, such as AVs, are getting smarter due to the incorporation of artificial intelligence (AI) algorithms. Furthermore, these AVs are becoming more and more autonomous in the sense that they now make decisions on their own using these AI algorithms. The authors suggest that, since the basic purpose of AVs is to serve humans, there is a need to equip them with ethical and social rules so that autonomous devices like AVs make decisions that do not harm passengers and other road users. It is the responsibility of researchers and programmers to devise ethics-enabled control algorithms for AVs that make them more acceptable to human society. The authors argue that incorporating the ethics of the society in which AVs operate will help courts of law decide the level of responsibility of an AV in the case of an accident. In this regard, they have proposed a mathematical model of ethical frameworks for incorporation into the control algorithms of autonomous vehicles.
The proposed model can compute the error rate between the actual and the desired path of the car under different constraints. However, the authors have not reported any case study that implements the proposed mathematical model in simulation or in real field tests.

Social and autonomous robots are the motivation for building social cars so that road accidents can be eliminated. Vehicle-to-vehicle (V2V) communication, a sub-part of intelligent transportation systems (ITS) equipped with sensing technologies and wireless communication, is helpful in preventing road accidents. A group of scientists has proposed a novel concept in which head-up displays (HUDs), human-computer interaction (HCI), and the communication of social information between cars provide social awareness; they named it the ‘social car’. This social car can sense the driving behavior of the driver by capturing the driver’s facial expressions, gestures, and eye contact. Further, the authors have argued that self-efficacy and social norms can change the driver’s behavior. Social norms can be transmitted over V2V using social networks, most of the time in the form of non-verbal communication. Hence, the combination of driver and car (machine) becomes a cyborg, so the driver of one car may treat the other driver as a machine. The authors also added that “social pressure is particularly suitable to influence human driving behaviors for the better and that this aspect is still relevant in the age of looming autonomous cars”.

A fully autonomous vehicle (AV) was first introduced in response to the Defense Advanced Research Projects Agency (DARPA) Grand Challenges. The major challenge for AVs is how they will make decisions at the time of a crash. This is the key point where ethics and social norms are required in AV development. To address this requirement, one research study evaluated three ethical theories, i.e. utilitarianism, respect for persons, and virtue ethics, which help AVs make the least harmful collision decision when a collision becomes unavoidable. The study performed its experiments using MATLAB, and the results revealed that the utilitarian system produced the lowest number of deaths, while the virtue ethics system resulted in the highest number of losses. However, virtue ethics, if fully integrated with good AI techniques, could be the best ethical solution. It is suggested that these ethical theories can be implemented in AVs in different scenarios and complex environments.
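To make the utilitarian idea concrete, the following minimal Python sketch picks the maneuver with the lowest expected harm when a collision cannot be avoided. It is not the MATLAB experiment from the cited study: the `Maneuver` type, the maneuver names, and all probabilities and harm values are hypothetical numbers chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Maneuver:
    name: str
    # Each outcome is (probability, people harmed if it occurs).
    # All values here are hypothetical, not measured data.
    outcomes: tuple


def expected_harm(maneuver):
    """Probability-weighted number of people harmed by a maneuver."""
    return sum(p * harm for p, harm in maneuver.outcomes)


def utilitarian_choice(maneuvers):
    """Utilitarian rule: choose the maneuver that minimizes expected harm."""
    return min(maneuvers, key=expected_harm)


options = [
    Maneuver("brake_in_lane", ((0.9, 2.0),)),
    Maneuver("swerve_left", ((0.5, 1.0), (0.1, 3.0))),
    Maneuver("swerve_right", ((0.3, 4.0),)),
]

best = utilitarian_choice(options)
print(best.name, round(expected_harm(best), 2))
```

A respect-for-persons or virtue-ethics variant would replace `expected_harm` with a different scoring rule, which is where the three theories would diverge in such a sketch.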

To make robots truly social and autonomous, a few authors have proposed emotions as a suitable ingredient. However, the role of emotions in designing truly social autonomous vehicles has not yet been explored. To support our idea, we give below some supporting evidence from the existing literature.

Human-robot interaction (HRI) is the field that studies how humans and robots interact. Social robots are becoming more popular than non-social agents, and robots should be equipped with social and emotional intelligence to handle HRI better. Cynthia has addressed this issue by integrating the concept of intelligence into robots so that they are perceived as more than a tool. In addition, the author has provided a development framework for building social robots from the human perspective.

Remote-controlled robots have limited sensors and lack the ability to interpret and express emotions. To address this issue, Cynthia has described three agents: Rea, Steve, and Kismet. Rea, a virtual agent, can answer queries about purchasing property. Rea can sense the position of a person and communicate with him or her using gestures and facial expressions. Steve, a tutoring agent, helps users learn to operate various equipment on a virtual ship. For natural interaction, a social, emotional, and expressive robot, Kismet, was developed using an ethological model of the organization of behavior in animals. Kismet can interpret and respond with positive or negative emotions and has the ability to learn from humans and autonomous agents.

Robots that can interact with people and have human-like qualities are called social robots. However, many of them, such as toys and video game characters, cannot interact with humans in complex environments. To overcome this issue, Cynthia has proposed the emotion-enabled sociable robot Kismet, which can learn from the environment and from human interaction using a proto-dialogue method.

The basic purpose of robots is to assist humans. Designing robots without keeping the human model in mind might create discomfort for humans. According to Cynthia and Brian, robots should be able to learn tasks by imitation, as human beings do. Hence, it would be interesting to explore the cognitive and emotional capabilities of humans for the better operation of robots within human society. Empathy is a type of emotion that motivates humans to help each other. According to Adriana and Maja, empathy is very useful in designing socially assistive robots. As a proof of concept, the authors designed a Therapist Hands-Off Robot using Davis’s empathy model. Applying HRI to build socially assistive applications is novel work. Tapus has discussed how robots can adapt to a person’s behavior in the treatment of post-stroke patients by formulating the problem as policy gradient reinforcement learning (PGRL).

Humanizing the AVs: A Possible Design Decision?

To formally include social norms and emotions, the fundamental design of AVs needs to change, because doing so can lead to more human-like behavior on future roads. It is important to note that an emotional action is a social action that helps to regulate and adapt other actors’ emotions and emotional expressions according to valid norms and rules. Emulating emotions and social norms in the design of AVs can help in building more comfortable, trustworthy, and collision-free AVs. However, the exact mode of implementation is still debatable. The supposition guiding this method is that the rules and norms guiding human-human interaction and communication may also be pertinent to AV design. Thus, the goal of the design principle would be to introduce anthropomorphic capabilities into AVs (Fig. 1).

Fig 1. Viewpoint theme: Autonomous vehicles exhibiting different emotions based on their size and situation

How Emotions Enforce Social Norms in Autonomous Vehicles: A Scenario

To analyze the above discussion further, let us examine a hypothetical interaction between two autonomous vehicles, as shown in Fig. 2. Suppose A is a heavy autonomous truck followed in the same lane by B, a smaller autonomous vehicle that weighs considerably less. One way of representing A is as a strongly influential person with high social status, whereas B is a less influential person with a weak social character. For safe driving, both actors have to follow social norms and rules. In many societies of the world, the weaker feels fear of the stronger, whereas the stronger can feel multiple emotions toward the weaker, such as sympathy and pride. To avoid a collision, actor B should maintain a safe distance from actor A, or actor A should practice sympathy toward B. Suppose A decreases its speed without regard for the distance between itself and B, which might lead to a collision. Suddenly another actor C (with strong social status) appears on the road. A starts to fear that if it collides with B, C will have evidence of its cruelty. The social norm “You will be punished for your act of crime” generates in A a fear of losing its status in society and of being punished by law enforcement institutions. Consequently, A maintains a safe distance from B and avoids the collision.

What has happened here? The primary event of threatening the weaker autonomous vehicle can be described by an appraisal theory. The social norm at work is that “Any actor of any status will be punished for performing evil deed”. So actor A avoids, or hesitates to carry out, the collision in the presence of witnesses. This social norm generates the fear of being punished. In other words, fear forces the actor to follow the norm.

Fig 2. Collision avoidance scenario using emotions enforced social norms
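The scenario above can be sketched in a few lines of Python. This is only an illustrative toy, not an implementation from the literature: the `Vehicle` class, the `status` scale, the fear formula (severity of the norm violation multiplied by the status of the strongest witness), and the threshold of 0.3 are all assumptions introduced here.

```python
from dataclasses import dataclass


@dataclass
class Vehicle:
    name: str
    status: float  # hypothetical social influence in [0, 1]
    fear: float = 0.0


def appraise_fear(gap_m, safe_gap_m, witnesses):
    """Fear of punishment for violating the safe-distance norm.

    Assumed rule: fear grows with how badly the gap is violated and with
    the social status of the strongest witness; no witness means no fear.
    """
    violation = max(0.0, 1.0 - gap_m / safe_gap_m)
    if not witnesses:
        return 0.0
    return violation * max(w.status for w in witnesses)


def target_gap(vehicle, gap_m, safe_gap_m, fear_threshold=0.3):
    """A sufficiently fearful vehicle restores the safe gap."""
    return safe_gap_m if vehicle.fear >= fear_threshold else gap_m


truck_a = Vehicle("A", status=0.9)
witness_c = Vehicle("C", status=0.9)

# A has closed to 5 m from B although the norm asks for 20 m.
truck_a.fear = appraise_fear(5.0, 20.0, witnesses=[])
gap_alone = target_gap(truck_a, 5.0, 20.0)

# C appears on the road and can witness a collision.
truck_a.fear = appraise_fear(5.0, 20.0, witnesses=[witness_c])
gap_watched = target_gap(truck_a, 5.0, 20.0)

print(gap_alone, gap_watched)
```

In this toy model A keeps the unsafe 5 m gap while unobserved and returns to the 20 m gap once C is present, mirroring the way fear enforces the norm in the scenario.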

Possible Issues

While the inclusion of emotions in AVs may lead to safer roads, it also raises the critical challenge of tackling problems generated by negative emotions such as anger, greed, and sadness. In anger, an AV could violate all social and road norms and cause severe road casualties. If the enraged AV is a heavy truck, severe financial and physical losses could occur. In the same way, a sad AV might not care about road rules and could break road norms, leading to road accidents. One possible solution to these challenges is to avoid using the emotions of anger and greed, or to introduce a controlling mechanism based on meta-cognition and meta-affect strategies.

Conclusion and Future Directions

Humans are going to delegate the right to drive to autonomous vehicles in the near future. However, to fulfill this complicated task, there is a need for a mechanism that enables autonomous vehicles to act both rationally and irrationally in order to take care of other road partners, as practiced by well-behaved and cooperative human drivers. This can be achieved by introducing emotions in combination with a rational approach in autonomous vehicles.

To build a proof of concept of the proposed viewpoint, researchers can build an artificial society of autonomous vehicles as an analogy of human society, in which each AV is assigned a social personality with a different level of social influence. The notions of emotions and social norms can then be introduced to tailor the autopilot of the AVs. To introduce emotions such as fear of collision, guilt for wrong actions, and gratitude for good behavior by other AVs, an appraisal-theory-based emotion computation model, i.e. the OCC (Ortony, Clore, and Collins) model, can be utilized. At the practical implementation level, researchers can further design experiments to identify the strengths and weaknesses of the emotion-based strategy compared with an equally viable emotion-free collision avoidance mechanism. For this purpose, NetLogo simulation can be employed for real-world modeling of an AV-based artificial society. It is suggested that researchers first utilize the Intelligent Driving Model, which helps AVs act rationally in scenarios such as car following, overtaking, and obeying traffic lights, in terms of competing objectives: short travel times, self-preservation, and altruistic considerations. The emotion-based irrational approach should then be simulated to observe the behavior of AVs in the same road scenarios.
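As a starting point for such an experiment, the sketch below pairs a rational car-following rule with a simple emotional modifier. It assumes that the driving model named above corresponds to the Intelligent Driver Model (IDM) of Treiber, Hennecke, and Helbing, and it uses Python rather than NetLogo purely for illustration. The parameter values are typical textbook defaults, and the `emotional_acceleration` function, which stretches the desired time headway in proportion to fear, is a hypothetical coupling rather than part of the IDM or the OCC model.

```python
import math


def idm_acceleration(v, gap, dv, v0=30.0, T=1.5, a_max=1.0, b=2.0,
                     s0=2.0, delta=4.0):
    """Intelligent Driver Model acceleration in m/s^2.

    v   : own speed (m/s)
    gap : bumper-to-bumper distance to the leader (m), must be > 0
    dv  : approach rate, own speed minus leader speed (m/s)
    v0  : desired speed, T: desired time headway, a_max: max acceleration,
    b   : comfortable deceleration, s0: minimum gap, delta: exponent
    """
    # Desired dynamic gap; the interaction term is clipped at zero.
    s_star = s0 + max(0.0, v * T + v * dv / (2.0 * math.sqrt(a_max * b)))
    return a_max * (1.0 - (v / v0) ** delta - (s_star / gap) ** 2)


def emotional_acceleration(v, gap, dv, fear, base_headway=1.5):
    """Hypothetical emotional layer: fear in [0, 1] lengthens the headway."""
    return idm_acceleration(v, gap, dv, T=base_headway * (1.0 + fear))


# Same traffic situation, with and without fear of collision.
calm = emotional_acceleration(20.0, 30.0, 0.0, fear=0.0)
fearful = emotional_acceleration(20.0, 30.0, 0.0, fear=1.0)
print(calm, fearful)
```

Running both variants over the same road scenarios would give the emotion-free baseline and the emotion-based behavior that the comparison described above calls for; a fearful vehicle brakes harder at the same gap because it wants a longer headway.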

In conclusion, it can be argued that while humans can express numerous types of complex emotions that these cars would perhaps not need to display or “feel”, there is certainly a need for the cars to regard human beings, the objects around them, and especially other cars with feelings such as compassion or even fear. We believe that this is a new horizon of research, which can become part of the collision avoidance strategy between autonomous vehicles (AV-AV).
